We usually store a lot of files during our work, and fast internet and easy access to data encourage this. Unfortunately, a very large part of the data we collect becomes unnecessary or outdated after some time. Some people review their datasets and periodically delete what they no longer need.

What about the cloud? Since storage prices have become very low, many of us collect data not only at home but also in the cloud. Why?

Because it is cheap, convenient and we have access to it from anywhere in the world 🙂

Nowadays, we need to filter data carefully to find what is useful to us. If we don’t, we’ll drown in a flood of information. With the stubbornness of a maniac we collect the data we need and keep it, and after some time it becomes unnecessary and obsolete.

The same applies to logs stored in the cloud. Storing them is also cheap and convenient, but logs have a shelf life too. Let’s not keep data longer than we have to. For some systems that will be 30 days, for others 90 days, and for some a year or several years, if we are required to keep them that long by law and regulations.

If you have any data in AWS that you would like to delete automatically after a certain period of time, then this article is for you!!

Automatic deletion of data from the entire S3 bucket

On the AWS (Amazon Web Services) platform, we can easily delete data from an S3 bucket automatically. Open Amazon S3 and, from the list, select the bucket on which you want to enable automatic deletion of files after a specified time.

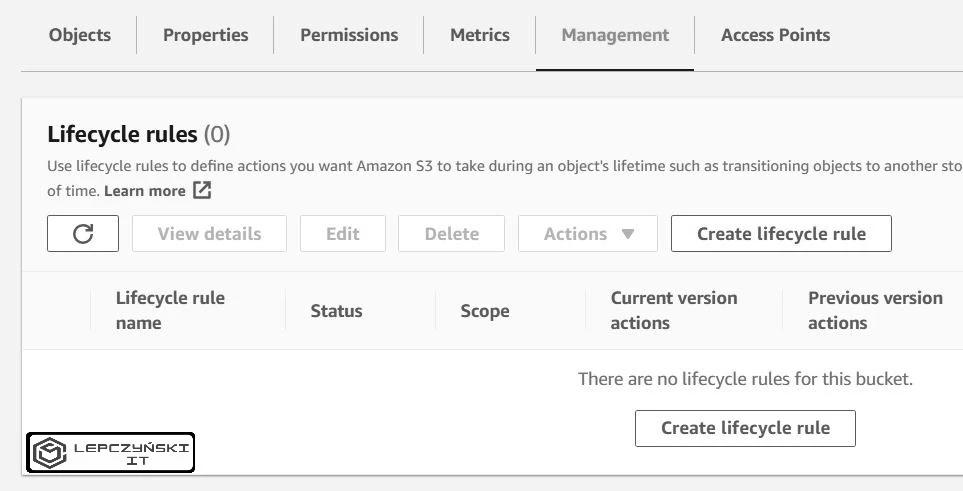

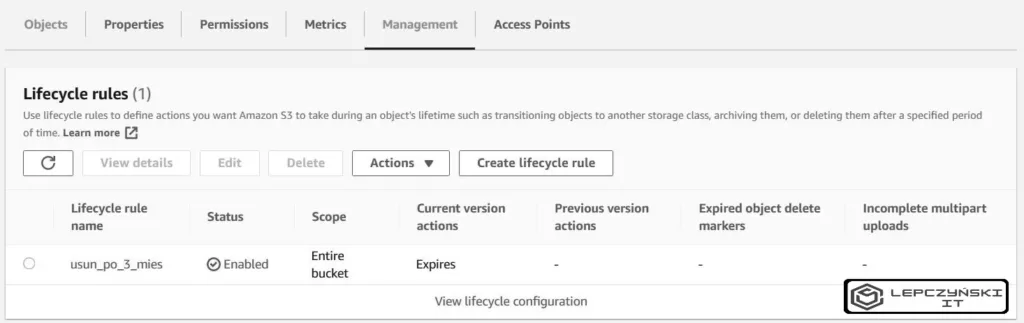

Go to the Management tab and click Create lifecycle rule.

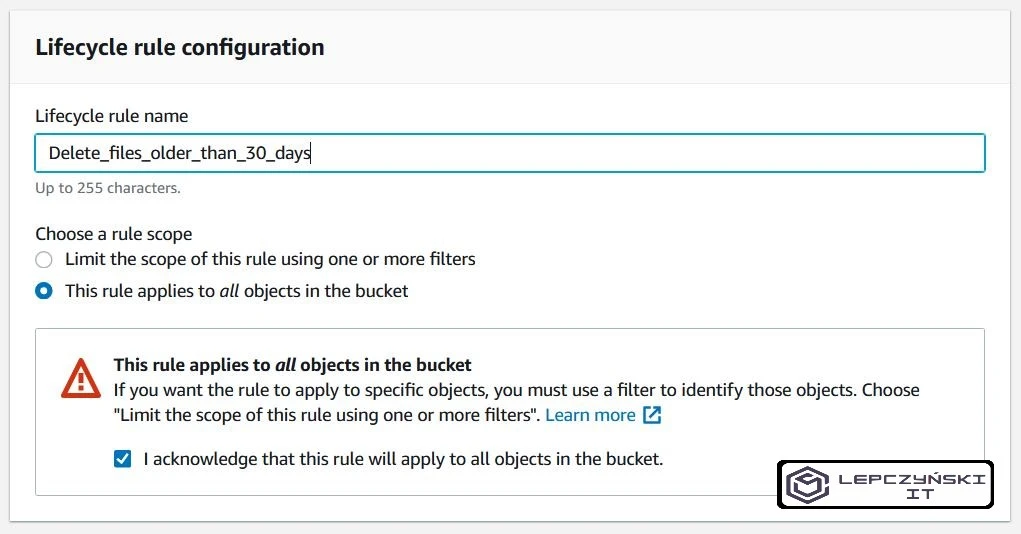

Enter a name for the rule. Select the option that applies the rule to all objects in the bucket, and tick the acknowledgment checkbox that appears. Be aware that all objects older than the number of days specified below will be deleted.

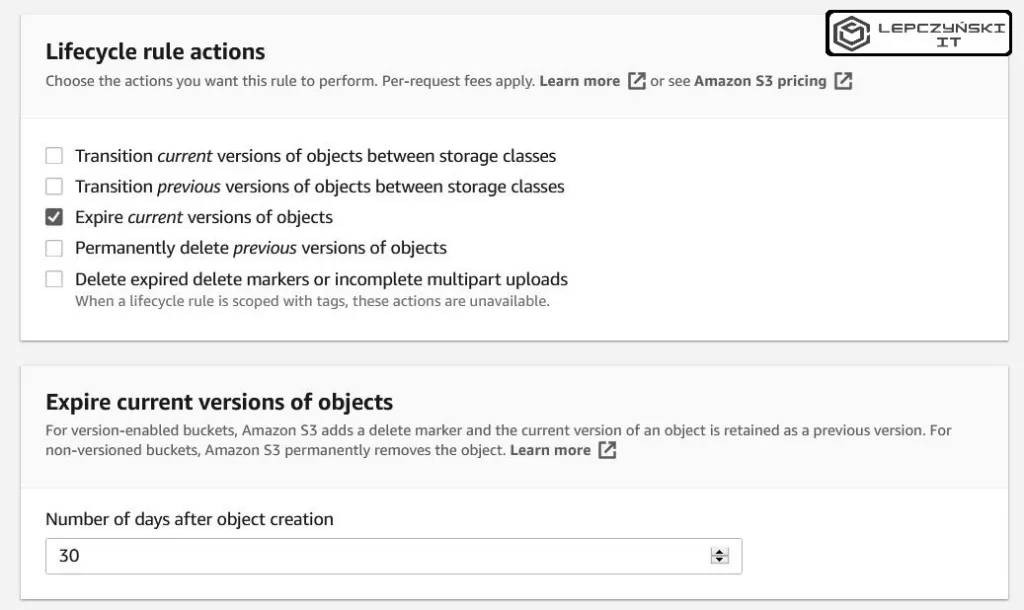

If we want the files to be deleted after 30 days, select the “Expire current versions of objects” option and enter the number of days after which the files will be deleted.

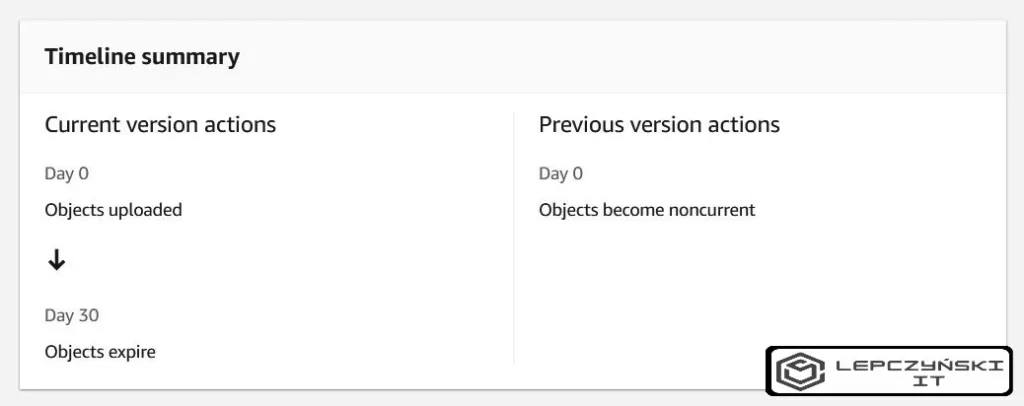
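The same rule can also be expressed in code. The sketch below builds it as the dictionary that boto3's `put_bucket_lifecycle_configuration` call accepts. The rule ID `delete-after-30-days` and the bucket name `my-example-bucket` are hypothetical, and the AWS call itself is left commented out so the snippet runs without credentials.

```python
def build_expiration_rule(rule_id: str, days: int, prefix: str = "") -> dict:
    """Return one lifecycle rule that expires current object versions after `days` days.

    An empty prefix means the rule applies to every object in the bucket.
    """
    if days < 1:
        raise ValueError("Expiration days must be a positive integer")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Expiration": {"Days": days},
    }


lifecycle_configuration = {
    "Rules": [build_expiration_rule("delete-after-30-days", 30)]
}

# Applying it requires boto3 and AWS credentials (bucket name is hypothetical):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="my-example-bucket",
#     LifecycleConfiguration=lifecycle_configuration,
# )
print(lifecycle_configuration)
```

Keep in mind that `put_bucket_lifecycle_configuration` replaces the bucket's whole lifecycle configuration, so any existing rules you want to keep must also be included in the `Rules` list.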

In the summary, we should see something similar to the picture below. When everything is correct, click Create rule, and our automatic file deletion rule is ready.

Automatic deletion of data from one folder from S3 bucket

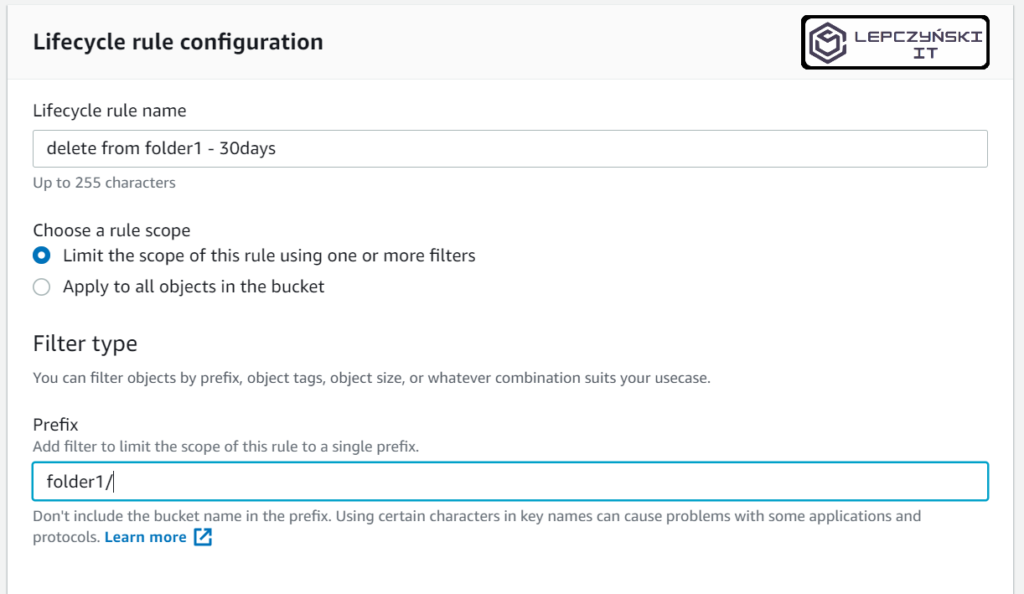

Everything works nicely, but what if we want a different retention period for each group of files? We won’t create a separate bucket for every group of files; managing so many buckets would be a nightmare. There is an easier way: we can limit the deletion of files to a specific folder or subfolder only.

I strongly recommend that you try this option on a test bucket if you are just learning, or make a copy of the bucket you are implementing it on, just in case.

If you make a mistake, you could lose your data.

If you are sure of what you are doing, you can limit file deletion to a specific folder only.

The process is very similar to the previous one. The only difference is that this time you select the “Limit the scope of this rule using one or more filters” option and enter, as the prefix, the name of the folder the rule should apply to, followed by a slash, for example ‘folder1/’.
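A lifecycle prefix is matched literally against the beginning of each object key; S3 has no real folders and the prefix accepts no wildcards. The sketch below approximates that matching with `str.startswith` (our own simplification, not an AWS API) to show why the trailing slash matters: without it, `folder1` also matches keys in a folder called `folder10`. The keys are made-up examples.

```python
def rule_matches(prefix: str, key: str) -> bool:
    """Approximate S3 lifecycle prefix filtering: a plain, case-sensitive prefix match."""
    return key.startswith(prefix)


keys = [
    "folder1/app.log",
    "folder1/sub/old.log",
    "folder10/keep.log",
    "other/folder1/keep.log",
]

for prefix in ("folder1", "folder1/"):
    matched = [k for k in keys if rule_matches(prefix, k)]
    print(f"{prefix!r:12} -> {matched}")
```

Both prefixes also match everything in subfolders such as `folder1/sub/`, but only `folder1/` excludes `folder10/keep.log`, and neither matches `other/folder1/keep.log`, because the folder name appears in the middle of that key rather than at its start.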

Summary of Lifecycle rules

These are the basics; the automation can be extended much further. Even the basics will help you avoid unnecessary expenses and keep things in order by automatically deleting old logs or outdated data from AWS S3.

If the article has given you some value, or you know someone who might need it, please share it on the Internet. Don’t let it sit idle on the blog and waste its potential.

I would also be pleased if you wrote a comment.

If you do not like to spend money unnecessarily, I invite you to read other articles on saving money in the cloud.

How can I delete files that are older than x days? Can we use an API or a script for that?

Please share an example with an explanation.

The simplest way is to use a lifecycle rule.

There is no single API or CLI command that deletes files older than x days.

Instead, you can, for example, mount S3 as a network drive (for example through s3fs) and use standard Linux commands to find and delete files older than x days.

You can also first use “aws s3 ls” to list the files and pick out those older than X days, and then use “aws s3 rm” to delete them.
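The same “list, then delete” idea can be sketched in Python instead of the CLI. This assumes boto3 is installed and credentials are configured; `select_older_than` and `delete_older_than` are hypothetical helper names, the age check uses each object's `LastModified` time, and the delete step defaults to a dry run.

```python
from datetime import datetime, timedelta, timezone


def select_older_than(objects, days, now=None):
    """Return keys whose LastModified is more than `days` days before `now`.

    `objects` are dicts shaped like the "Contents" entries of list_objects_v2,
    each with a "Key" and a timezone-aware "LastModified" datetime.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    return [obj["Key"] for obj in objects if obj["LastModified"] < cutoff]


def delete_older_than(bucket, prefix, days, dry_run=True):
    """List objects under `prefix` and delete the ones older than `days` days."""
    import boto3  # imported here so the pure filter above works without boto3

    client = boto3.client("s3")
    paginator = client.get_paginator("list_objects_v2")
    old_keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        old_keys += select_older_than(page.get("Contents", []), days)

    if dry_run:
        for key in old_keys:
            print("would delete:", key)
        return old_keys

    # delete_objects accepts at most 1000 keys per request, so delete in batches
    for start in range(0, len(old_keys), 1000):
        batch = old_keys[start:start + 1000]
        client.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": key} for key in batch]},
        )
    return old_keys
```

A call such as `delete_older_than("my-example-bucket", "logs/", 30)` (hypothetical bucket and prefix) only prints what it would remove; pass `dry_run=False` once the list looks right. On a versioned bucket, deleting without a version ID only adds delete markers, so the data is not removed permanently.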

There are many ways. You can find some examples on Stack Overflow, but I haven’t tested them 😉 https://stackoverflow.com/questions/50467698/how-to-delete-files-older-than-7-days-in-amazon-s3

Your use of prefixes is incorrect. What you have written here will achieve nothing.

Sorry about that. You’re right, I forgot to add ‘/’ at the end of the directory names. But when I tested it, it worked fine.

To be consistent with the documentation, I will correct the article. Thank you very much for pointing this out.

Hi, can we also use a lifecycle rule to delete an object stored in the S3 Glacier Deep Archive class before 180 days have passed?

I haven’t tested this on Glacier Deep Archive, but I found in the documentation that you can use “S3 Lifecycle management for automatic migration of objects”.

By the way, this is how the documentation describes Glacier Deep Archive: “Lowest cost storage class designed for long-term retention of data that will be retained for 7-10 years”.

https://aws.amazon.com/s3/storage-classes/

Very useful tutorial.

I’d like to know how I can delete only .zip files.

I have different types of archives, and I want to delete only the old .zip files.

When I want to delete files with a specific extension in MediaStore, I don’t use:

"path": [{"prefix": "folder1/"}],

Instead, I use:

"path": [{"wildcard": "folder1/*.zip"}],

In this example, I delete all files with the ‘.zip’ extension from ‘folder1’.

I just don’t know whether you can use it in S3.

Remember that it is better to test any changes in a test environment.

I set a lifecycle rule for my entire S3 bucket so that files are deleted automatically after one day, but the next day the files were not deleted. Why?

Amazon S3 runs lifecycle rules once every day. After the first time that Amazon S3 runs the rules, all objects that are eligible for expiration are marked for deletion. You’re no longer charged for objects that are marked for deletion.

However, rules might take a few days to run before the bucket is empty because expiring object versions and cleaning up delete markers are asynchronous steps. For more information about this asynchronous object removal in Amazon S3, see Expiring objects.
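The AWS documentation also describes when an object becomes eligible: S3 adds the number of days in the rule to the object's creation time and rounds the result up to the next midnight UTC. The helper below is our own sketch of that calculation (`expiration_eligibility` is a hypothetical name). The documentation's worked example is a transition rule, and we assume the same rounding for expiration; the actual removal can happen even later, because expiration is asynchronous.

```python
from datetime import datetime, timedelta, timezone


def expiration_eligibility(created: datetime, days: int) -> datetime:
    """Creation time + `days`, rounded up to the following midnight UTC.

    `created` must be timezone-aware. Treating an exact midnight as
    rounding to the next day is our assumption for that edge case.
    """
    moment = created.astimezone(timezone.utc) + timedelta(days=days)
    next_day = moment.date() + timedelta(days=1)
    return datetime(next_day.year, next_day.month, next_day.day, tzinfo=timezone.utc)


# Example from the AWS documentation: created 2014-01-15 10:30 UTC with a 3-day rule
created = datetime(2014, 1, 15, 10, 30, tzinfo=timezone.utc)
print(expiration_eligibility(created, 3))  # 2014-01-19 00:00:00+00:00
```

With a 1-day rule, a file uploaded at 15:00 UTC on 10 May becomes eligible only at 00:00 UTC on 12 May, which is one reason a file can still be there “the next day”.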

Can I clean my S3 bucket every 2 hours? I suppose that can’t be done with lifecycle rules. Is there any other way?

You can, for example, run a CLI command from cron:

aws s3 rm s3://bucket-name/example --recursive

In this article you will find “5 ways to remove AWS S3 bucket”.

Can I remove existing files older than 30 days using this approach?

This is exactly the example I described, the files are automatically deleted after 30 days. You can set the time as you like.

This is a very useful article. Can we create one rule that deletes 30-day-old files inside subfolders like these:

Bucket-name\ServerName\DatabaseName\FullBackup\

Bucket-name\ServerName\DatabaseName\DiffBackup\

Bucket-name\ServerName\DatabaseName\LogBackup\

How should I write the prefix?

Don’t include the name of the S3 bucket in the prefix, and you definitely need to use “/” instead of “\”. Then it should work better.
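Turning the paths from the question into valid prefixes can be sketched as follows. `to_s3_prefix` is a hypothetical helper that drops the bucket name, swaps backslashes for forward slashes, and keeps a trailing `/`; `Bucket-name`, `ServerName` and `DatabaseName` are the placeholders from the question.

```python
def to_s3_prefix(path: str, bucket: str) -> str:
    """Turn a Windows-style path into an S3 key prefix that ends with '/'."""
    parts = [part for part in path.replace("\\", "/").split("/") if part]
    if parts and parts[0] == bucket:
        parts = parts[1:]  # the bucket name never belongs in the prefix
    return "/".join(parts) + "/" if parts else ""


paths = [
    "Bucket-name\\ServerName\\DatabaseName\\FullBackup\\",
    "Bucket-name\\ServerName\\DatabaseName\\DiffBackup\\",
    "Bucket-name\\ServerName\\DatabaseName\\LogBackup\\",
]
for path in paths:
    print(to_s3_prefix(path, "Bucket-name"))
# ServerName/DatabaseName/FullBackup/
# ServerName/DatabaseName/DiffBackup/
# ServerName/DatabaseName/LogBackup/
```

Because prefix matching includes everything below the prefix, a single rule with the prefix `ServerName/DatabaseName/` would cover all three backup folders if they share the same 30-day retention.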

Today I published my new tutorial about S3 lifecycle rules on YouTube – https://www.youtube.com/watch?v=U9bhFf3q6YI

In the video, I show how to create rules that delete data from only one folder in an S3 bucket after 30, 90 and 365 days.

Thanks for this article. It sounds like I need to use the lifecycle options. Originally I was under the impression that I could use the --expires flag on the cp command. I thought that would copy files to the bucket and set an expiry date on each individual object, instead of at the bucket or folder level. It sounds like that is not true.

If I understand it correctly, the --expires flag is used for something else: it sets the object’s Expires metadata, which concerns caching rather than deletion:

--expires (string) The date and time at which the object is no longer cacheable.

I think a lifecycle rule will be a good choice.

Hi, where do I put this “wildcard”? There is no wildcard in the Lifecycle Configuration.

I have checked and unfortunately it can only be used with MediaStore. https://docs.aws.amazon.com/mediastore/latest/ug/policies-object-lifecycle-components.html

It turns out that if you need to delete files with a certain extension, it is probably best to use a Lambda function.

CLI example:

aws s3 rm s3://test-bucket1/ --recursive --dryrun --exclude "*" --include "*.json"
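Lifecycle filters cannot match on a file extension, so one option is a scheduled script or Lambda function that does the filtering itself. The sketch below shows only the selection step. The `.zip` suffix, the 30-day threshold, the name `select_old_by_extension`, and the case-insensitive comparison are illustrative choices, and the input mimics `list_objects_v2` entries.

```python
from datetime import datetime, timedelta, timezone


def select_old_by_extension(objects, extension, days, now=None):
    """Return keys ending with `extension` (case-insensitive) that are older than `days` days."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    return [
        obj["Key"]
        for obj in objects
        if obj["Key"].lower().endswith(extension.lower()) and obj["LastModified"] < cutoff
    ]


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
listing = [
    {"Key": "folder1/backup-01.zip", "LastModified": datetime(2024, 4, 1, tzinfo=timezone.utc)},
    {"Key": "folder1/backup-02.tar.gz", "LastModified": datetime(2024, 4, 1, tzinfo=timezone.utc)},
    {"Key": "folder1/backup-03.ZIP", "LastModified": datetime(2024, 6, 29, tzinfo=timezone.utc)},
]
print(select_old_by_extension(listing, ".zip", 30, now))  # ['folder1/backup-01.zip']
```

In the CLI command above, the order of the filters matters: filters that appear later take precedence, which is why --exclude "*" comes before --include "*.json".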

Hello,

Thanks for the information, but is there a way to customize when the lifecycle rule is triggered, for example from midnight UTC to 4 pm UTC?

Hi, and thanks for answering my question about the wildcard. Is there a way to change when the lifecycle rule runs or is triggered? I know it always runs at midnight UTC, but can I change that?

I don’t think it can be changed. At least I did not find such information anywhere.

In the documentation you can find: “The date value must conform to the ISO 8601 format. The time is always midnight UTC.”
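For rules that expire objects on a fixed date instead of after a number of days, the sketch below builds such a rule and normalizes the date to midnight UTC, as the quoted documentation requires. The rule ID and prefix are hypothetical, and the dictionary mirrors the shape boto3's `put_bucket_lifecycle_configuration` accepts.

```python
from datetime import date, datetime, timezone


def date_expiration_rule(rule_id: str, prefix: str, expire_on: date) -> dict:
    """Lifecycle rule that expires matching objects on `expire_on` at 00:00 UTC."""
    midnight_utc = datetime(expire_on.year, expire_on.month, expire_on.day, tzinfo=timezone.utc)
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Expiration": {"Date": midnight_utc},
    }


rule = date_expiration_rule("expire-2025-archive", "archive/2025/", date(2026, 1, 1))
print(rule["Expiration"]["Date"].isoformat())  # 2026-01-01T00:00:00+00:00
```

This only makes the date explicit; it does not change when S3 runs its daily lifecycle processing.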

Oh, thank you, this is very valuable information.

Thanks a lot for sharing this. Is it possible to set one lifecycle policy for multiple folders, or do I need to set a separate policy for each folder and subfolder?

You can use only a single prefix in a rule.

If you have many prefixes, you must copy the rule and change the prefix.

You can find more information in the documentation.
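Since each rule takes a single prefix, several folders mean several rules inside one lifecycle configuration. The sketch below generates them; the folder names and the `expire-…` ID scheme are hypothetical conventions of ours, and the result has the shape boto3's `put_bucket_lifecycle_configuration` accepts.

```python
def rules_for_prefixes(prefixes, days):
    """Build one expiration rule per prefix, since a rule filter holds a single prefix."""
    rules = []
    for prefix in prefixes:
        if not prefix.endswith("/"):
            prefix += "/"  # treat each entry as a folder
        rule_id = "expire-" + prefix.rstrip("/").replace("/", "-")
        rules.append({
            "ID": rule_id,
            "Filter": {"Prefix": prefix},
            "Status": "Enabled",
            "Expiration": {"Days": days},
        })
    return {"Rules": rules}


config = rules_for_prefixes(["logs/app", "logs/web/", "tmp/"], 30)
for rule in config["Rules"]:
    print(rule["ID"], rule["Filter"]["Prefix"])
```

If the folders share a parent and the same retention, a single rule on the parent prefix is simpler, because it already covers every subfolder below it.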

Of course. For all subfolders in s3_folder, for example, you can set just one main rule with the prefix s3_folder/. You don’t need to set a rule for each subfolder, such as s3_folder/folder1/, s3_folder/folder2/, s3_folder/folder3/…

Hello,

I want to generate a monthly report of the data availability on a specific day.

How can we do this? Are there any configurations or other ways to achieve it?

Thanks in advance!

It’s a bit unrelated to this article, but you can use ‘AWS Config’ for AWS resources. Maybe someday I will write an article about AWS Config, for now you can read about it on the AWS website https://aws.amazon.com/config/

If you only mean S3, you can enable logging of what is happening in S3. But if you want to monitor something specific in S3, it’s probably best to use Lambda, Athena, or an external tool. You can take a look at these.

Thank you so much for your valuable input ..!

I need your help: why is the test file I uploaded to the folder not deleted after 1 day? I set a rule for my specific test folder with automatic deletion 1 day after upload. Why is the test file not deleted? Thank you.

I can’t see your configuration so it’s hard to say, maybe there is some syntax or configuration error, maybe something small. Or maybe everything is ok and you just have to wait.

Amazon S3 runs lifecycle rules once every day. After the first time that Amazon S3 runs the rules, all objects that are eligible for expiration are marked for deletion. You’re no longer charged for objects that are marked for deletion.

However, rules might take a few days to run before the bucket is empty because expiring object versions and cleaning up delete markers are asynchronous steps. For more information about this asynchronous object removal in Amazon S3, see Expiring objects.

Hello sir. The file was successfully deleted; there was some delay, but the files are now gone. My problem is that after all the files were deleted, the folder I used for the lifecycle rule was also deleted. How can I keep the folder after all the files have been deleted? Thank you so much.

You can create a folder with put_object:

    import boto3

    client = boto3.client('s3')
    response = client.put_object(Bucket='test-745df33637-source-lambda-copy', Body='', Key='test-folder/')

I checked that it works, but you should give the Lambda function extra permissions to create objects in S3.
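To bring the folder back automatically after the lifecycle rule removes it, a small scheduled function could recreate the marker only when it is missing. The sketch below expects a boto3 S3 client; `ensure_folder_marker` is a hypothetical helper, and the caller would need s3:ListBucket and s3:PutObject permissions.

```python
def ensure_folder_marker(client, bucket: str, folder: str) -> bool:
    """Recreate the zero-byte 'folder' object if it is missing. Returns True if created."""
    if not folder.endswith("/"):
        folder += "/"
    response = client.list_objects_v2(Bucket=bucket, Prefix=folder, MaxKeys=1)
    contents = response.get("Contents", [])
    # General purpose buckets list keys in ascending order, so a marker whose key
    # equals the prefix itself would be the first key returned.
    if contents and contents[0]["Key"] == folder:
        return False
    client.put_object(Bucket=bucket, Key=folder, Body=b"")
    return True
```

For example, `ensure_folder_marker(boto3.client("s3"), "my-example-bucket", "test-folder/")` with a hypothetical bucket name; running it on a schedule after the daily lifecycle pass keeps the folder visible in the console.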

For example

I have a bucket named abc with folders and many subfolders, like below:

/Folder1/folder2/folder3/objects

Folder1 and folder2 also contain some objects. My aim is to delete only the objects under folder3, without deleting folder3 itself. How can I achieve this using a prefix?

I tried the prefixes below:

Folder1/folder2/folder3/

/Folder1/folder2/folder3/*

/Folder1/folder2/folder3

But most of the time, folder3 also gets deleted along with the objects inside it.

Hi, when you create a folder in Amazon S3, S3 creates a 0-byte object whose key is the folder name you provided. If that object is deleted and no other files use that path, the folder also disappears.
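This also explains the question above: a prefix such as `Folder1/folder2/folder3/` matches the zero-byte marker object itself, so the rule expires the marker together with the files. The sketch below reproduces this with plain string matching, and `folders_under` approximates how a listing with `Delimiter='/'` groups keys into “folders” (S3's CommonPrefixes). It is a simulation, not an AWS call, and the keys are made up.

```python
def folders_under(keys, parent):
    """Approximate the CommonPrefixes of list_objects_v2(Prefix=parent, Delimiter='/')."""
    folders = set()
    for key in keys:
        if not key.startswith(parent):
            continue
        rest = key[len(parent):]
        if "/" in rest:
            folders.add(parent + rest.split("/", 1)[0] + "/")
    return sorted(folders)


prefix = "Folder1/folder2/folder3/"
keys = [
    "Folder1/folder2/",               # marker for folder2
    "Folder1/folder2/report.csv",
    "Folder1/folder2/folder3/",       # zero-byte marker for folder3
    "Folder1/folder2/folder3/a.log",
    "Folder1/folder2/folder3/b.log",
]

expired = [k for k in keys if k.startswith(prefix)]  # the marker is matched too
remaining = [k for k in keys if k not in expired]
print(expired)
print(folders_under(keys, "Folder1/folder2/"))       # ['Folder1/folder2/folder3/']
print(folders_under(remaining, "Folder1/folder2/"))  # [] -> folder3 is gone
```

Object keys normally do not start with “/”, so prefixes such as /Folder1/folder2/folder3/ would match nothing unless the keys really begin with a slash. One option we have not tested here is to combine the prefix with an ObjectSizeGreaterThan condition of 0 bytes in the rule's And filter, so that zero-byte markers are skipped; check the current lifecycle filter documentation before relying on it.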

I need to delete files older than 100 days in a non-versioned bucket.

The lifecycle option “Expire current versions of objects” does not work.

I modified lifecycle to “Permanently delete noncurrent versions of objects”

Days after objects become noncurrent – 100

However, checking an old object’s properties does not show its expiration date:

“Expiration rule

You can use a lifecycle configuration to define expiration rules to schedule the removal of this object after a pre-defined time period.

–

Expiration date

The object will be permanently deleted on this date.

-”

I need to wait until midnight, when the rule is executed (so bad, AWS, so bad).

Still, it should work for a non-versioned bucket:

Non-versioned bucket – The Expiration action results in Amazon S3 permanently removing the object.

From the documentation:

https://docs.aws.amazon.com/AmazonS3/latest/userguide/intro-lifecycle-rules.html?icmpid=docs_amazons3_console#intro-lifecycle-rules-actions

PS. The AWS CLI does not show when a lifecycle rule is executed. Is there another way to get this information?

Why do you think that “Expire current versions of objects” doesn’t work?

Amazon S3 runs lifecycle rules once every day. After the first time that Amazon S3 runs the rules, all objects that are eligible for expiration are marked for deletion. You’re no longer charged for objects that are marked for deletion.

However, rules might take a few days to run before the bucket is empty because expiring object versions and cleaning up delete markers are asynchronous steps. For more information about this asynchronous object removal in Amazon S3, see Expiring objects – https://docs.aws.amazon.com/AmazonS3/latest/userguide/lifecycle-expire-general-considerations.html
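Regarding the PS: S3 does not expose when the daily lifecycle job runs, but for an object covered by an expiration rule, HeadObject and GetObject return an x-amz-expiration value with the expiry date and the rule ID. boto3 exposes it as `response.get("Expiration")` from `head_object`, and `aws s3api head-object` prints it as `Expiration`. The parser below is our own sketch (`parse_expiration` is a hypothetical name), and the sample string follows the format shown in the AWS API reference.

```python
import re
from datetime import timezone
from email.utils import parsedate_to_datetime
from urllib.parse import unquote


def parse_expiration(header):
    """Parse an x-amz-expiration value into (expiry datetime in UTC, rule id).

    Returns None when the header is absent, i.e. no expiration rule applies.
    """
    if not header:
        return None
    fields = dict(re.findall(r'([\w-]+)="([^"]*)"', header))
    expiry = parsedate_to_datetime(fields["expiry-date"]).astimezone(timezone.utc)
    return expiry, unquote(fields.get("rule-id", ""))  # rule-id is URL-encoded


example = 'expiry-date="Fri, 23 Dec 2012 00:00:00 GMT", rule-id="picture-deletion-rule"'
print(parse_expiration(example))
```

This shows when a particular object is scheduled to expire, not when the rule last ran.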

It’s not applying the lifecycle rule because the object’s version ID is NULL.

Why? Because the bucket was non-versioned at the beginning. Even after I enabled versioning, the object’s version ID is still NULL.

DOC:

https://docs.aws.amazon.com/AmazonS3/latest/userguide/troubleshooting-versioning.html

“When versioning is suspended on a bucket, Amazon S3 marks the version ID as NULL on newly created objects. An expiration action in a versioning-suspended bucket causes Amazon S3 to create a delete marker with NULL as the version ID. In a versioning-suspended bucket, a NULL delete marker is created for any delete request. These NULL delete markers are also called expired object delete markers when all object versions are deleted and only a single delete marker remains. If too many NULL delete markers accumulate, performance degradation in the bucket occurs.”

Yes you are right. Versioning can be complicated sometimes.

It’s best to follow good practices; sometimes it’s also best to contact AWS support.
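For a bucket that is, or once was, versioned, a single rule can combine Expiration for current versions with NoncurrentVersionExpiration for older ones, and a separate rule with ExpiredObjectDeleteMarker can remove the leftover delete markers described in the quote above. The sketch below only builds the dictionaries in the shape boto3's `put_bucket_lifecycle_configuration` accepts. The rule IDs are hypothetical, and the 100 days comes from the comment.

```python
def versioned_cleanup_rules(prefix: str, days: int) -> dict:
    """Lifecycle rules for a bucket that is (or was) versioned.

    Rule 1 expires current versions and permanently deletes noncurrent ones.
    Rule 2 removes delete markers that have no noncurrent versions left.
    """
    return {
        "Rules": [
            {
                "ID": "expire-current-and-noncurrent",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Expiration": {"Days": days},
                "NoncurrentVersionExpiration": {"NoncurrentDays": days},
            },
            {
                "ID": "remove-expired-delete-markers",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # ExpiredObjectDeleteMarker cannot share an Expiration block with Days or Date
                "Expiration": {"ExpiredObjectDeleteMarker": True},
            },
        ]
    }


config = versioned_cleanup_rules("", 100)
print(config)
```

On a versioned or versioning-suspended bucket, expiring the current version only adds a delete marker; the data is removed permanently once the noncurrent version has also passed its NoncurrentDays period.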
