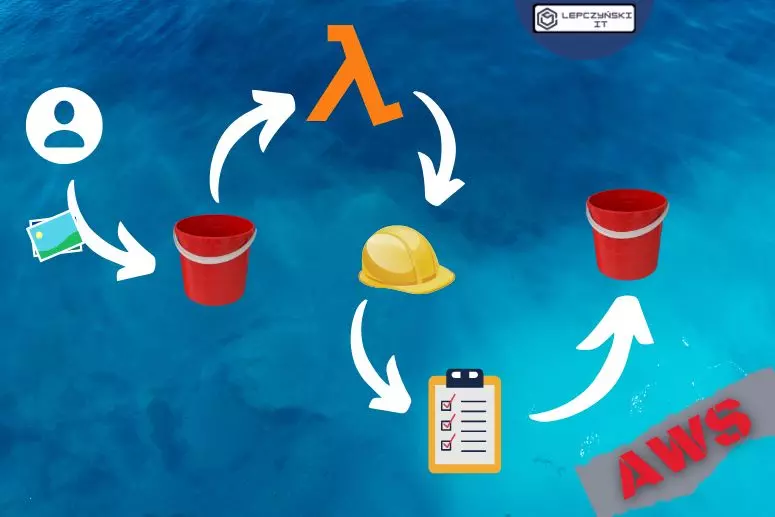

In this article you will learn how a Lambda function can automatically move files from one S3 bucket to another: once the copy has finished, the source file is deleted. Whenever someone adds a file to a specific folder in bucket_1, it is moved to a folder in bucket_2 and removed from bucket_1, with no manual action needed.

You will learn how to create:

- S3 buckets with appropriate permissions,

- an IAM policy for the IAM role,

- an IAM role with the appropriate policies attached,

- a Lambda function that uses this IAM role,

- a trigger that runs the Lambda function automatically.

1) S3 bucket

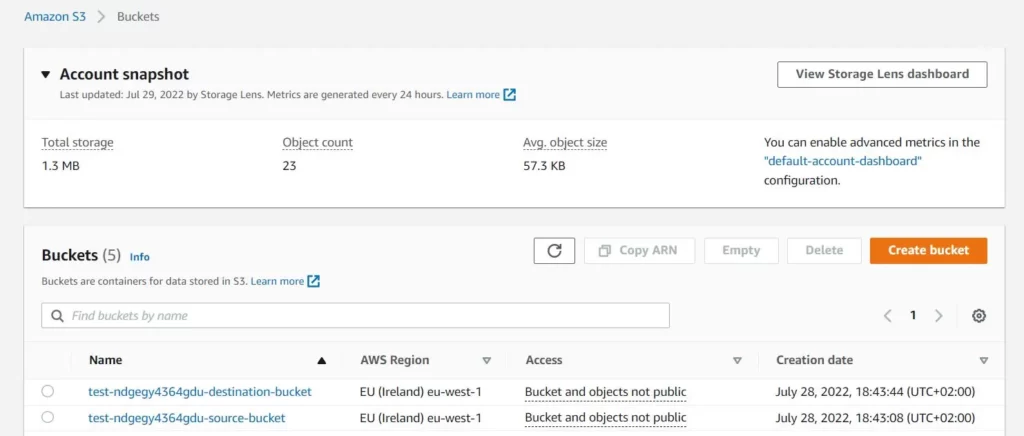

I will create 2 separate S3 buckets. The first is the source, from which the Lambda function copies files. The second is the destination, to which the files are copied.

Just click ‘Create bucket’ and give it a name. Make sure you select the correct region: the same one in which you will create the Lambda function, because an S3 bucket can only trigger a function in its own region. Unless you really need public access, leave ‘Block all public access’ turned on. For more on S3, see the article CDN and 2 ways to have a static website in AWS – blog (lepczynski.it).
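If you prefer to script this step, the buckets can also be created with boto3. The sketch below is an alternative to the console, not the method used in this article. It assumes you pass in an already configured boto3 S3 client and choose the bucket name and region yourself. It mirrors the console choices above by turning on all four ‘Block all public access’ settings. Note that us-east-1 is special: for that region the request must not include a LocationConstraint.

```python
# Block Public Access settings equivalent to 'Block all public access' in the console.
BLOCK_ALL_PUBLIC_ACCESS = {
    'BlockPublicAcls': True,
    'IgnorePublicAcls': True,
    'BlockPublicPolicy': True,
    'RestrictPublicBuckets': True,
}


def bucket_request(name, region):
    """Build the keyword arguments for S3 create_bucket."""
    kwargs = {'Bucket': name}
    # us-east-1 rejects an explicit LocationConstraint; every other region needs one.
    if region != 'us-east-1':
        kwargs['CreateBucketConfiguration'] = {'LocationConstraint': region}
    return kwargs


def create_private_bucket(s3_client, name, region):
    """Create a bucket and keep all public access blocked (sketch, assumes a boto3 client)."""
    s3_client.create_bucket(**bucket_request(name, region))
    s3_client.put_public_access_block(
        Bucket=name,
        PublicAccessBlockConfiguration=BLOCK_ALL_PUBLIC_ACCESS,
    )
```

For example, call create_private_bucket(boto3.client('s3', region_name='eu-central-1'), 'test-ndgegy4364gdu-source-bucket', 'eu-central-1') and repeat it for the destination bucket. Here eu-central-1 is only an example region. Passing the client in as a parameter keeps the function easy to test without touching AWS.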

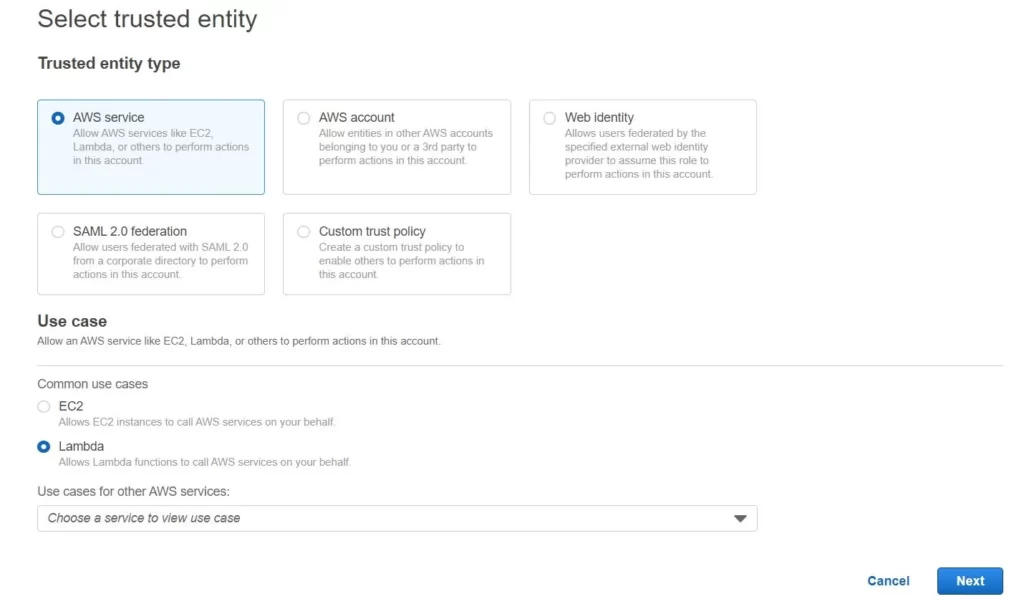

2) IAM role for Lambda

First, create a new IAM role for the Lambda function and attach the appropriate policies to it. For better security, grant only the minimum permissions needed to perform the task.

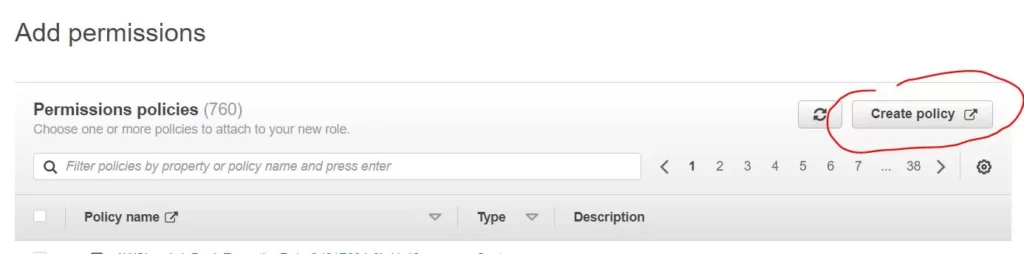

The best way is to create a new policy: go to the JSON tab and enter the permissions you need. The AWS Policy Generator is also a handy alternative.

Below are the minimum permissions needed to read and delete objects in the source folder, list the source bucket, and write objects to the destination folder:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:DeleteObject"

],

"Resource": "arn:aws:s3:::test-ndgegy4364gdu-source-bucket/images/*"

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": "s3:ListBucket",

"Resource": [

"arn:aws:s3:::test-ndgegy4364gdu-source-bucket",

"arn:aws:s3:::test-ndgegy4364gdu-source-bucket/images/*"

]

},

{

"Sid": "VisualEditor2",

"Effect": "Allow",

"Action": "s3:PutObject",

"Resource": "arn:aws:s3:::test-ndgegy4364gdu-destination-bucket/images/*"

}

]

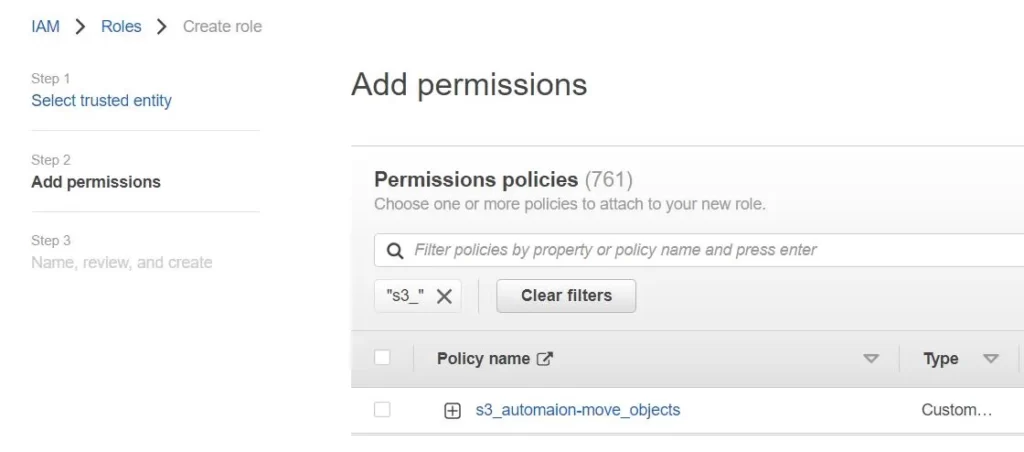

}When you create a policy, you search for its name and add it to the role:
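If you use different bucket names or a different folder, the same policy can be generated instead of being edited by hand. The sketch below is a minimal helper, not part of the original setup. The function name build_move_policy and the Sid values are hypothetical. It shows which ARN form each action needs: object actions take bucket/prefix*, while ListBucket takes the plain bucket ARN.

```python
import json


def build_move_policy(source_bucket, dest_bucket, prefix='images/'):
    """Return an IAM policy document for moving objects under `prefix` (sketch)."""
    return {
        'Version': '2012-10-17',
        'Statement': [
            {
                # Object-level actions: the ARN includes the key prefix.
                'Sid': 'ReadAndDeleteSource',
                'Effect': 'Allow',
                'Action': ['s3:GetObject', 's3:DeleteObject'],
                'Resource': f'arn:aws:s3:::{source_bucket}/{prefix}*',
            },
            {
                # Bucket-level action: the ARN is the bucket itself.
                'Sid': 'ListSource',
                'Effect': 'Allow',
                'Action': 's3:ListBucket',
                'Resource': f'arn:aws:s3:::{source_bucket}',
            },
            {
                'Sid': 'WriteDestination',
                'Effect': 'Allow',
                'Action': 's3:PutObject',
                'Resource': f'arn:aws:s3:::{dest_bucket}/{prefix}*',
            },
        ],
    }
```

print(json.dumps(build_move_policy('my-source', 'my-destination'), indent=2)) prints JSON that you can paste into the console's JSON tab. The bucket names here are placeholders.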

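Then give the new IAM role a super cool name and move on to the next step. If you also want the function's print() output to be stored in CloudWatch Logs, attach the AWS managed policy AWSLambdaBasicExecutionRole to the role as well.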

You can find more about IAM roles and policies in the article How to automatically start EC2 in the AWS cloud? – devops blog (lepczynski.it)
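The role can also be created from code. The sketch below is a minimal alternative to the console steps above. It assumes a boto3 IAM client and a permissions policy document, such as the JSON shown earlier, loaded as a dict. Role and policy names are yours to choose. The trust policy is what allows the Lambda service to assume the role. The sketch also attaches AWSLambdaBasicExecutionRole for CloudWatch Logs, which is optional.

```python
import json

# Trust policy: allows the Lambda service to assume this role.
LAMBDA_TRUST_POLICY = {
    'Version': '2012-10-17',
    'Statement': [{
        'Effect': 'Allow',
        'Principal': {'Service': 'lambda.amazonaws.com'},
        'Action': 'sts:AssumeRole',
    }],
}

# AWS managed policy that lets the function write to CloudWatch Logs (optional).
BASIC_EXECUTION_ARN = 'arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole'


def create_lambda_role(iam_client, role_name, policy_name, permissions_policy):
    """Create a role, a customer managed policy, and attach both policies (sketch)."""
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )
    policy = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(permissions_policy),
    )
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=policy['Policy']['Arn'])
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=BASIC_EXECUTION_ARN)
    return role['Role']['Arn']
```

The returned role ARN is what you select as the function's execution role. A freshly created role can take a few seconds to propagate, so a Lambda function created immediately afterwards may briefly fail to assume it.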

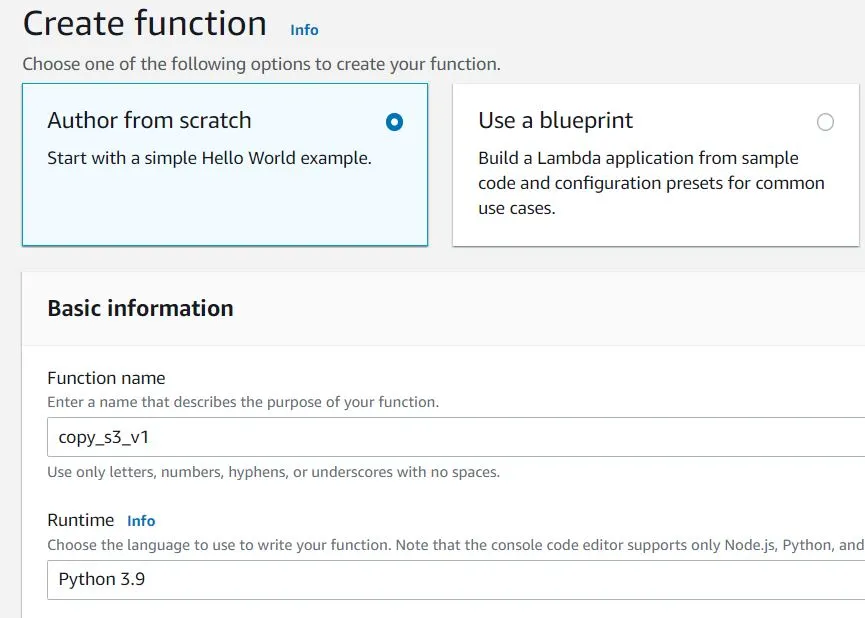

3) Lambda function

How to create a lambda function

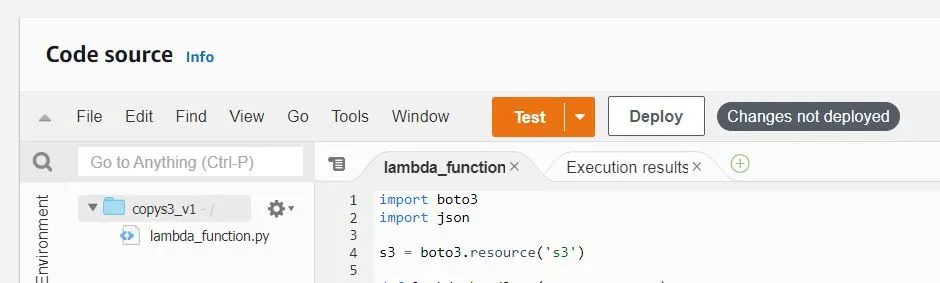

Okay, now we come to the most important part: creating the Lambda function. I’m creating it from scratch (‘Author from scratch’) with the Python 3.9 runtime.

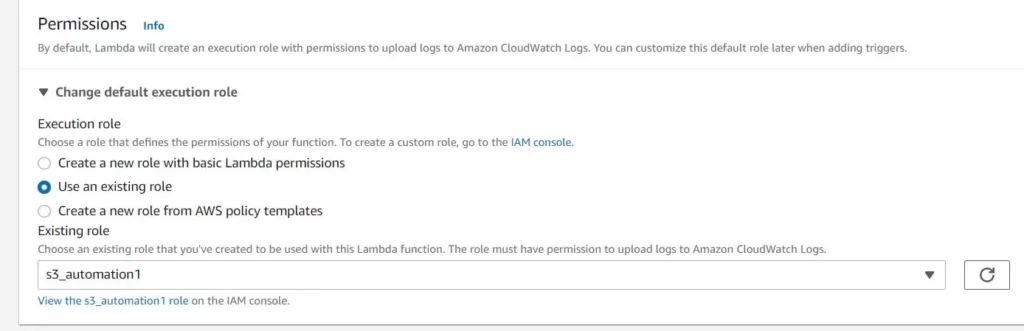

Remember to select the IAM role created in step 2 as the function's execution role. Then paste in the function code:

```python
import boto3

# Created outside the handler so it is reused between invocations.
s3 = boto3.resource('s3')


def lambda_handler(event, context):
    bucket = s3.Bucket('test-ndgegy4364gdu-source-bucket')
    dest_bucket = s3.Bucket('test-ndgegy4364gdu-destination-bucket')
    print(bucket)
    print(dest_bucket)

    # Lists only objects directly under images/; Delimiter='/' leaves out subfolders.
    for obj in bucket.objects.filter(Prefix='images/', Delimiter='/'):
        dest_key = obj.key
        # Skip the empty 'images/' folder placeholder so the folder itself stays in place.
        if dest_key.endswith('/'):
            continue

        print('copy file ' + dest_key)
        # copy_from is a single CopyObject request (objects up to 5 GB).
        s3.Object(dest_bucket.name, dest_key).copy_from(
            CopySource={'Bucket': obj.bucket_name, 'Key': obj.key}
        )

        print('delete file from source bucket ' + dest_key)
        s3.Object(bucket.name, obj.key).delete()
```

test-ndgegy4364gdu-source-bucket – this is my source bucket, from which I copy files (remember to rename it)

test-ndgegy4364gdu-destination-bucket – this is the destination bucket, where the files will appear (remember to rename it)

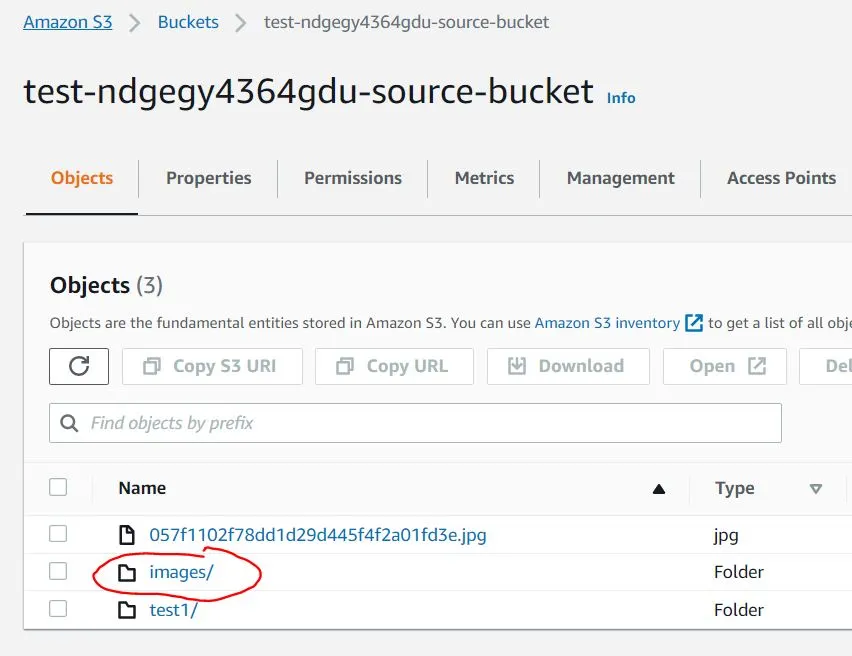

I copy the files from the images folder in test-ndgegy4364gdu-source-bucket to the images folder in test-ndgegy4364gdu-destination-bucket. If you change Prefix='images/' to Prefix='', the function moves the files at the top level of the bucket. To move every object, including those in subfolders, also remove Delimiter='/'.
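To see why Prefix and Delimiter behave this way, here is a small plain-Python simulation of which keys an S3 listing returns as objects. It does not call AWS. The function list_like_s3 is hypothetical and models only the Prefix/Delimiter rule: keys that still contain the delimiter after the prefix are grouped into "subfolders" and are not returned as objects.

```python
def list_like_s3(keys, prefix='', delimiter=''):
    """Simulate which keys an S3 listing with Prefix/Delimiter returns as objects."""
    selected = []
    for key in sorted(keys):  # S3 returns keys in lexicographic order
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        # With a delimiter, keys containing it after the prefix are rolled up
        # into common prefixes (subfolders) instead of being listed as objects.
        if delimiter and delimiter in rest:
            continue
        selected.append(key)
    return selected


keys = ['images/', 'images/a.jpg', 'images/2023/b.jpg', 'docs/c.pdf', 'top.txt']
print(list_like_s3(keys, 'images/', '/'))  # ['images/', 'images/a.jpg']
print(list_like_s3(keys, '', '/'))         # ['top.txt']
```

Note that the folder placeholder 'images/' itself matches the prefix. That is why the function above skips keys ending in '/'.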

You can modify the Lambda function as you wish. I wanted to show that you do not have to copy every file in the bucket; you can limit the function to a specific folder.
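One possible modification is to use the event that the S3 trigger passes to the function once the trigger is configured. That event names the uploaded object, so the function can move just that object instead of listing the whole folder on every run. The sketch below is a variant, not the code used in this article. It assumes the standard S3 event notification format and uses the low-level client (copy_object, delete_object) rather than the resource API. DEST_BUCKET is the destination bucket from this article. The optional s3_client parameter is a hypothetical convenience for local testing. Object keys in S3 events are URL-encoded, which is why the sketch uses unquote_plus.

```python
from urllib.parse import unquote_plus

# Assumption: the destination bucket from this article; rename it for your setup.
DEST_BUCKET = 'test-ndgegy4364gdu-destination-bucket'


def keys_from_event(event):
    """Yield (bucket, key) pairs for every record in an S3 event notification."""
    for record in event.get('Records', []):
        bucket = record['s3']['bucket']['name']
        # Keys arrive URL-encoded (a space becomes '+'), so decode them first.
        key = unquote_plus(record['s3']['object']['key'])
        yield bucket, key


def lambda_handler(event, context, s3_client=None):
    if s3_client is None:
        # Imported here so the helper above can be tested without boto3.
        import boto3
        s3_client = boto3.client('s3')

    moved = []
    for src_bucket, key in keys_from_event(event):
        if key.endswith('/'):
            continue  # ignore 'folder' placeholder objects
        s3_client.copy_object(
            Bucket=DEST_BUCKET,
            Key=key,
            CopySource={'Bucket': src_bucket, 'Key': key},
        )
        s3_client.delete_object(Bucket=src_bucket, Key=key)
        print(f'moved s3://{src_bucket}/{key} to s3://{DEST_BUCKET}/{key}')
        moved.append(key)
    return moved
```

With this variant, the console test needs an S3 event (for example, the ‘s3-put’ template edited to your bucket and key), because an empty event moves nothing.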

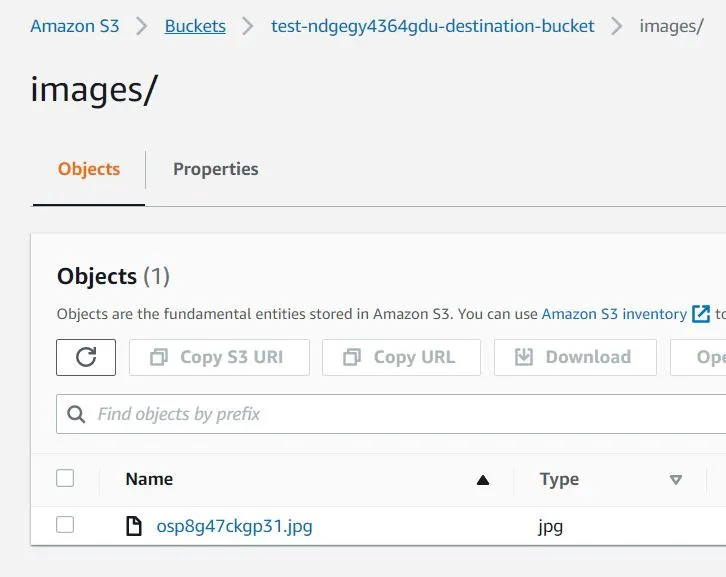

Make sure the function works by clicking ‘Deploy’ and then ‘Test’. The handler ignores the test event, so any event template will do. If you have done everything right, the files will be moved to the destination bucket and removed from the source bucket.
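Besides the console's ‘Test’ button, you can invoke the function from your own machine. The sketch below is a minimal example that assumes a boto3 Lambda client and a function name of your choosing; the name s3-mover below is hypothetical. The handler in this article ignores its event, so an empty JSON payload is enough. A function that raises an exception still returns StatusCode 200 from a synchronous invoke, so the sketch checks FunctionError instead.

```python
import json


def invoke_and_check(lambda_client, function_name, payload=None):
    """Invoke a Lambda function synchronously and fail loudly on a function error."""
    body_in = payload if payload is not None else {}
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType='RequestResponse',
        Payload=json.dumps(body_in).encode('utf-8'),
    )
    body = response['Payload'].read().decode('utf-8')
    # FunctionError is only present when the function itself raised an error.
    if 'FunctionError' in response:
        raise RuntimeError(f'{function_name} failed: {body}')
    return response['StatusCode'], body
```

For example, invoke_and_check(boto3.client('lambda'), 's3-mover') returns the status code and the function's return value as JSON text. For the handler above, that text is null because the handler returns nothing.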

How to add automation to S3

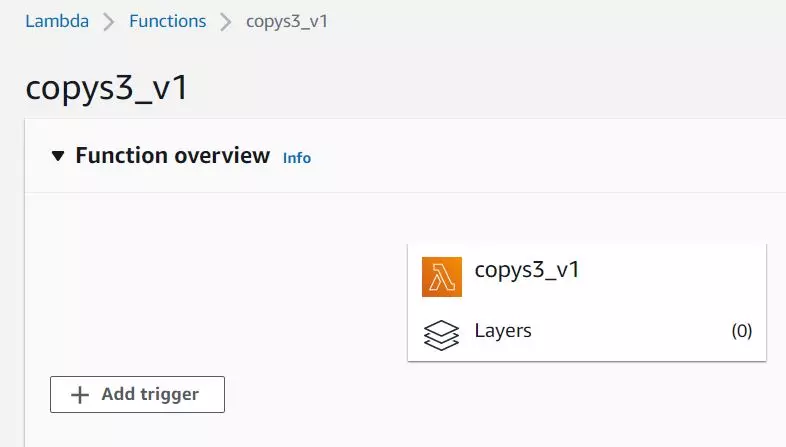

Once you have made sure that everything works correctly, you can add the automation. In ‘Function overview’, click ‘Add trigger’:

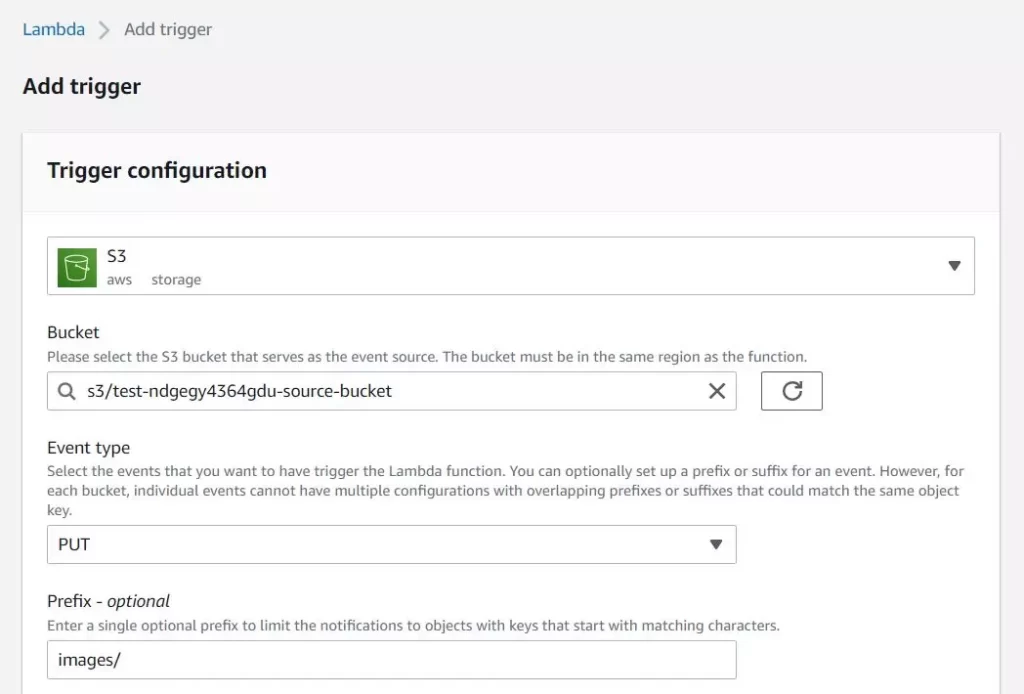

Select S3 from the list, then choose your source bucket, which is where you will be adding files.

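Set the event type to PUT, because you only want the function to be triggered when objects are added. Keep in mind that large files, which the AWS CLI uploads in parts, generate a ‘Multipart upload completed’ event instead of PUT. If you expect such files, choose ‘All object create events’.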

It is very important to add a prefix (here images/) if you only want to move files from a specific folder. Otherwise, the function will be invoked every time anything is added to the bucket, even in a different folder.
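The trigger can also be configured from code. The sketch below is a minimal example, not the article's method. It assumes boto3 S3 and Lambda clients, and it takes the function ARN and AWS account ID as placeholders. It does the two things the console does when you add the trigger. First, it grants S3 permission to invoke the function. Then it sets the bucket's event notification with the images/ prefix filter. Be careful: put_bucket_notification_configuration replaces the bucket's entire notification configuration.

```python
def build_notification_config(function_arn, prefix='images/', events=None):
    """Notification config: invoke the function for new objects under `prefix`."""
    return {
        'LambdaFunctionConfigurations': [
            {
                'LambdaFunctionArn': function_arn,
                'Events': list(events) if events else ['s3:ObjectCreated:Put'],
                'Filter': {'Key': {'FilterRules': [{'Name': 'prefix', 'Value': prefix}]}},
            }
        ]
    }


def add_s3_trigger(s3_client, lambda_client, bucket, function_arn, account_id,
                   prefix='images/', statement_id='AllowS3Invoke'):
    """Allow S3 to invoke the function, then register the notification (sketch)."""
    # Permission first: S3 validates that it can invoke the function
    # when the notification configuration is saved.
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=statement_id,
        Action='lambda:InvokeFunction',
        Principal='s3.amazonaws.com',
        SourceArn=f'arn:aws:s3:::{bucket}',
        SourceAccount=account_id,
    )
    # Replaces any existing notification configuration on the bucket.
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(function_arn, prefix),
    )
```

To also catch multipart uploads, pass events=['s3:ObjectCreated:*'] to build_notification_config.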

Click ‘Add’ and that’s pretty much it. You can test the automation by uploading files to the source bucket through the console, or with the AWS CLI, for example:

```bash
aws s3 cp ./directory s3://test-ndgegy4364gdu-source-bucket/images --recursive
```

Summary

Lambda functions are worth getting to know even if you’re not a developer; they can make life a lot easier.

This article is just the beginning of an adventure with S3 automation using Lambda functions. With a few more lines of code, you could make the function do more, for example also resize images.

In the future, perhaps I will write an article on how files added to S3 can not only be moved to another bucket, but also, for example, automatically added to a web page without any additional action. All the user has to do is add the files to S3 and they will automatically appear on the static web page. If you are interested in the topic of static websites created easily in AWS, I encourage you to read CDN and 2 ways to have a static website in AWS – blog (lepczynski.it)