In this article you will learn how to save files to S3 and read them back from a Lambda function using boto3 on AWS. I also show which permissions I gave the Lambda function so that it can do these things and nothing else. It is good practice to grant only the minimal permissions required. Avoid building solutions on admin roles; instead, adjust the permissions for each function and project individually.

In the corporate world this is a valued skill: it improves security and builds the trust of your superiors, because they do not have to fix the code after you and the project does not drag on.

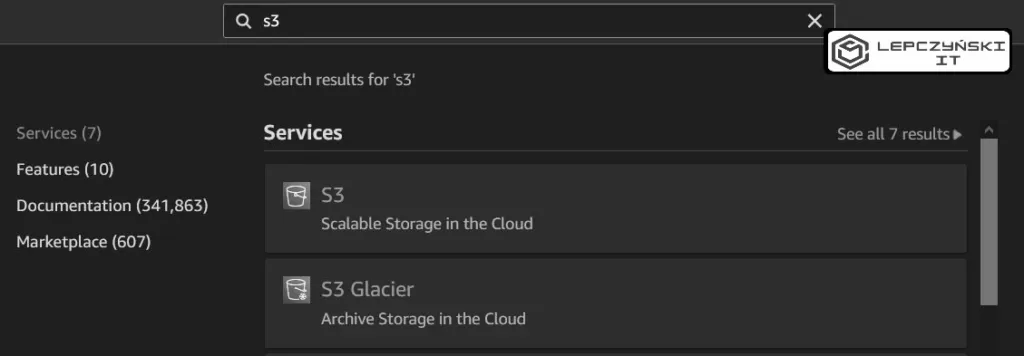

S3 bucket

The first step is to create an S3 bucket that will be used to read and write files. My bucket will be called “save-data-from-lambda”.
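If you would rather script this step than click through the console, a minimal sketch with boto3 could look like the one below. The helper name `create_bucket_in_region` is hypothetical, and the region `eu-central-1` is assumed from the Lambda code later in this article. The helper takes an existing S3 client, so it can be tried with any client object you pass in.

```python
def create_bucket_in_region(s3_client, bucket_name, region):
    """Create an S3 bucket in the given region (hypothetical helper)."""
    params = {"Bucket": bucket_name}
    # us-east-1 is the default region and must not be passed as a
    # LocationConstraint; every other region has to be named explicitly.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return s3_client.create_bucket(**params)
```

With a real client this would be called as `create_bucket_in_region(boto3.client("s3", region_name="eu-central-1"), "save-data-from-lambda", "eu-central-1")`. Bucket names are globally unique, so you will need a name of your own.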

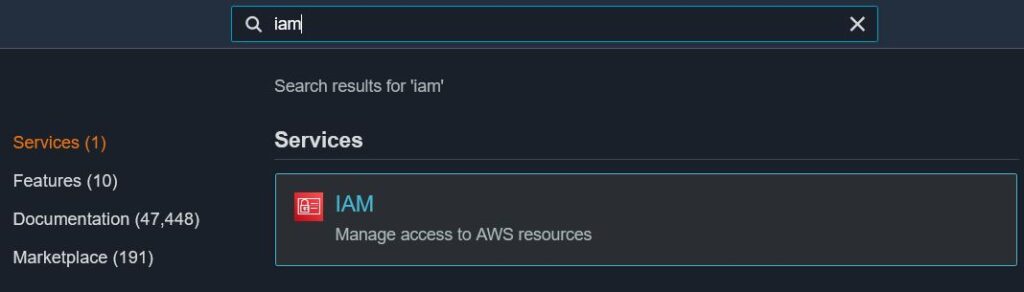

IAM role

Okay, let’s get down to the important stuff. Now create a new IAM role and attach an IAM policy that allows only the actions you need. My policy allows reading and writing files in S3:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::save-data-from-lambda",
        "arn:aws:s3:::save-data-from-lambda/*"
      ]
    }
  ]
}
```

As you have probably noticed, the policy above allows only the listed actions, and only on the S3 bucket named save-data-from-lambda. You should of course replace it with the name of your own S3 bucket. Note that s3:ListBucket is the IAM action that authorizes the list_objects_v2 call used later; s3:ListObjects and s3:ListObjectsV2 are API operation names, not IAM actions, so they do not belong in the policy.

Of course, if you need to do more things, you should add more actions to the IAM policy, but the one I created is enough to read and write files.
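Since the bucket name appears twice in the policy, it can be convenient to generate the document instead of editing it by hand. The sketch below is one possible way to do that; the function name `build_s3_policy` is hypothetical and not part of boto3. It is also a slightly stricter variation of the policy above: it splits the bucket-level action (`s3:ListBucket`, which authorizes listing keys) from the object-level actions (`s3:GetObject`, `s3:PutObject`), so each action is granted only on the resource type it applies to.

```python
import json


def build_s3_policy(bucket_name):
    """Return a least-privilege read/write/list policy for one bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing keys is a bucket-level permission.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Reading and writing are object-level permissions.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


# Print the policy as JSON, ready to paste into the IAM console.
print(json.dumps(build_s3_policy("save-data-from-lambda"), indent=2))
```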

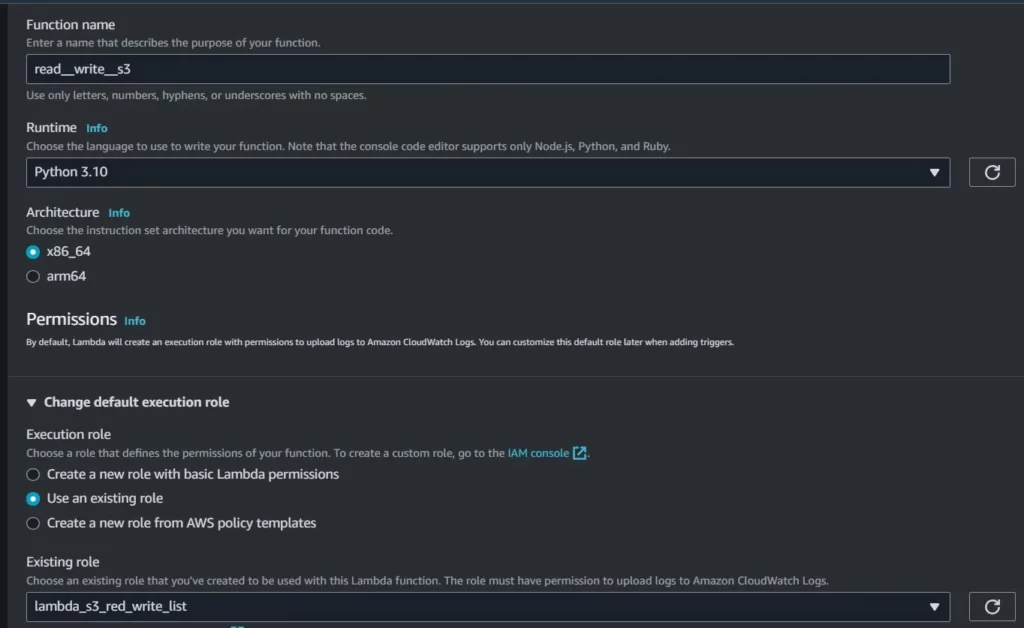

Lambda Function

Now the most important part of the project – the Lambda Function. After creating a place where you will be able to save and read files, and an IAM role that will allow you to do all these things, you can start creating the Lambda function.

First comes the most difficult and time-consuming part: coming up with a super cool name for the Lambda function ;) After that it gets easier. Just select the runtime, in my case Python 3.10, and the IAM role created in the previous step, in my case lambda_s3_red_write_list.

Below you will find the code that writes two lists to S3 and reads them back. In the video “How to read write files on s3 using lambda function – python & boto3 | AWS 2023” on YouTube I give more examples and a more detailed description of the Lambda function. If you are interested, I invite you to watch it.

```python
import json

import boto3


def lambda_handler(event, context):
    bucket_name = "save-data-from-lambda"

    list001 = ['this', 'is', 'first', 'list']
    s3_path_001 = "001"
    list002 = ['this', 'is', 'second', 'list']
    s3_path_002 = "002"

    new_list = list()

    s3_client = boto3.client('s3', region_name='eu-central-1')

    # save to s3: each list is serialized to JSON and stored under its own key
    save_to_s3 = s3_client.put_object(
        Key=s3_path_001,
        Bucket=bucket_name,
        Body=(json.dumps(list001).encode('UTF-8'))
    )

    save_to_s3 = s3_client.put_object(
        Key=s3_path_002,
        Bucket=bucket_name,
        Body=(json.dumps(list002).encode('UTF-8'))
    )

    # read from s3: list the keys in the bucket, then download each object
    s3_bucket = s3_client.list_objects_v2(
        Bucket=bucket_name
    )

    # "Contents" is missing from the response when the bucket is empty
    for content in s3_bucket.get("Contents", []):
        file = content["Key"]
        read_from_s3 = s3_client.get_object(
            Key=file,
            Bucket=bucket_name
        )
        # the body is stored as the raw JSON string, not parsed back to a list
        new_list.append(read_from_s3["Body"].read().decode('UTF-8'))

    print(new_list)

    return new_list
```

You can easily adapt the code to your needs by changing the values of the variables at the beginning. You should definitely change the name of the S3 bucket, which is in the bucket_name variable.
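If you want to go one step further, the same logic can be split into small reusable helpers. The sketch below is only one possible variation, and the names `save_json` and `load_all_json` are hypothetical. It differs from the handler above in two ways: it uses the boto3 paginator for `list_objects_v2`, because a single call returns at most 1000 keys, and it parses each object back from JSON, so you get Python lists instead of raw strings. It assumes every object in the bucket contains valid JSON.

```python
import json


def save_json(s3_client, bucket_name, key, data):
    """Serialize data as JSON and upload it under the given key."""
    s3_client.put_object(
        Bucket=bucket_name,
        Key=key,
        Body=json.dumps(data).encode("utf-8"),
    )


def load_all_json(s3_client, bucket_name):
    """Read every object in the bucket and decode it from JSON."""
    results = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name):
        # "Contents" is absent when a page holds no objects.
        for item in page.get("Contents", []):
            response = s3_client.get_object(Bucket=bucket_name, Key=item["Key"])
            results.append(json.loads(response["Body"].read().decode("utf-8")))
    return results
```

Inside the handler you would create the client once with `boto3.client('s3', region_name='eu-central-1')` and pass it to both helpers. Passing the client in as an argument also makes the helpers easy to try out with a stand-in object, without touching a real bucket.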

Summary

As you have seen, saving files to S3 and reading them back is not difficult. This is not just theory that you will never use; it is a real-life pattern that shows up in many projects. I simplified the example a lot to make the topic easier to understand, but it is still a good base that you can extend with more services.

If you are interested in the cloud, check out my other articles: AWS – Wojciech Lepczyński – DevOps Cloud Architect (lepczynski.it).

I also strongly encourage you to visit my YouTube channel and subscribe.